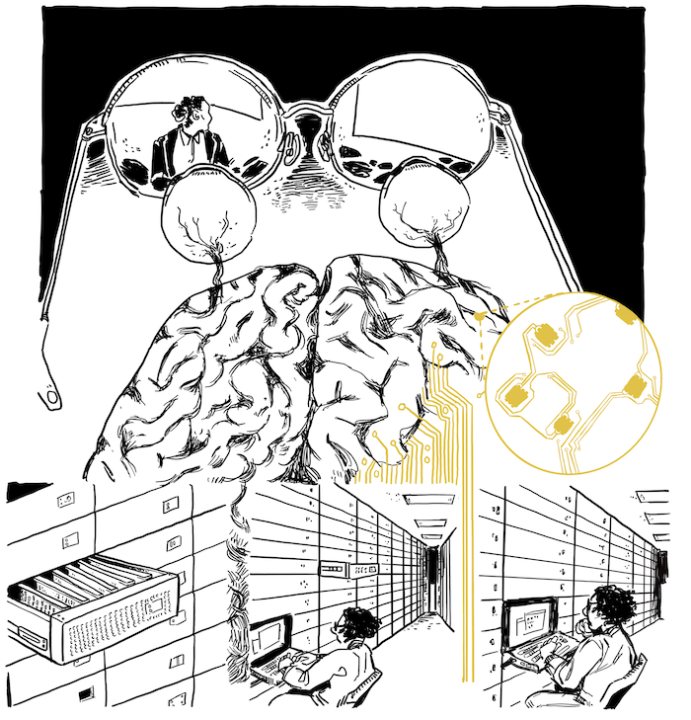

The Institute of Neuroscience (ION) is a group of biologists, psychologists, mathematicians, and human physiologists at the University of Oregon that has pooled its expertise to tackle fundamental questions in neuroscience – questions such as:

- How do neural circuits produce behavior?

- What can computational approaches tell us about how the brain operates?

- How do neural stem cells choose between self-renewal and differentiation?

- What mechanisms generate the large diversity of neurons within the brain?

- How do these neurons 'wire up' into functional circuits?

- What are the circuits of reward, addiction, memory, and cognitive flexibility?

Judith Eisen was awarded the George Streisinger Award from the International Zebrafish Society. This award recognizes Dr. Eisen's long track record of pioneering research in developmental neurobiology and her innovative ongoing research program to understand host-microbe interactions and their impact on the nervous system.

Emily Sylwestrak is awarded a Klingenstein-Simons Fellowship Award in Neuroscience. "The Klingenstein-Simons award gives recognition to outstanding scientists who have made valuable contributions in their early research efforts, and who show the greatest promise for a successful research career."

The McCormick and Berkman Labs receive a competitive grant from the Tiny Blue Dot Foundation to examine the neural and psychological effects of undergraduate course instruction in positive life engagement.

The Miller Lab reveals the role of important proteins in the control of electrical synapses. Learn more in Around the O and Current Biology.

The Lockery Lab asks the question, "Do C. elegans get the munchies too?" Learn more in Nature and Current Biology.

"UO neuroscientists look deep into the eyes of the octopus" features work by the Cris Niell Lab in Around the O.

Chuck Kimmel is elected to the National Academy! Charles Kimmel is responsible for establishing the zebrafish (Danio rerio) as a standard laboratory model organism that is used prominently in academic laboratories and industries around the world to study the genetic mechanisms underlying vertebrate development and function, including human diseases. His research and mentorship of legions of colleagues and gifted young scientists resulted in a fundamental transformation in the biological sciences, the importance of which cannot be overstated.

"Researchers predict rat behaviors from brain activity" in Around the O features our recent article by the Mazzucato Lab, published in Neuron.

ION boasts a highly collaborative faculty with expertise in genetics, development, electrophysiology, optogenetics, functional imaging, computational modeling, and theory. As a result, students enrolled in our PhD program come away with the broad conceptual and technical skills necessary to become independent and successful scientists. Our state-of-the-art facilities and excellent support staff allow ION members to progress rapidly from exploratory and pilot experiments to rigorous testing of novel hypotheses.

We're passionate about creating an inclusive environment that promotes and values diversity. Research institutions that are diverse in gender, gender identity, race, sexual orientation, ethnicity, age, and perspective are proven to foster greater creativity and promote synergistic, collaborative innovation and interactions. We stand in solidarity with all of those seeking opportunity and freedom from prejudice and bias. In recognition of the significant problems in the US and elsewhere for underrepresented minorities in STEM fields, we vow to contribute towards a better future.

A longstanding area of research excellence and a popular new major, neuroscience takes center stage at the University of Oregon

Trainee Awards